def objective_fn(

prefix: str = None,

return_model: bool = False,

fit_on_all_training_data:bool = False,

epochs:int = 100,

verbosity: int = 0,

**suggestions

)->Union[float, Model]:

"""

This function must build, train and evaluate the ML model.

The output of this function will be minimized by optimization algorithm.

In this example we are considering same number of units and same activation for each

layer. If we want to have (optimize) different number of units for each layer,

willhave to modify the parameter space accordingly. The LSTM function

can be used to have separate number of units and activation function for each layer.

Parameters

----------

prefix : str

prefix to save the results. This argument will only be used after

the optimization is complete

return_model : bool, optional (default=False)

if True, then objective function will return the built model. This

argument will only be used after the optimization is complete

epochs : int, optional

the number of epochs for which to train the model

verbosity : int, optional (default=1)

determines the amount of information to be printed

fit_on_all_training_data : bool, optional

Whether to predict on all the training data (training+validation)

or to training on only training data and evaluate on validation data.

During hpo iterations, we will train the model on training data

and evaluate on validation data. After hpo, we the model is

trained on allt he training data and evaluated on test data.

suggestions : dict

a dictionary with values of hyperparameters at the iteration when

this objective function is called. The objective function will be

called as many times as the number of iterations in optimization

algorithm.

Returns

-------

float or Model

"""

suggestions = jsonize(suggestions)

global ITER

# i) build model

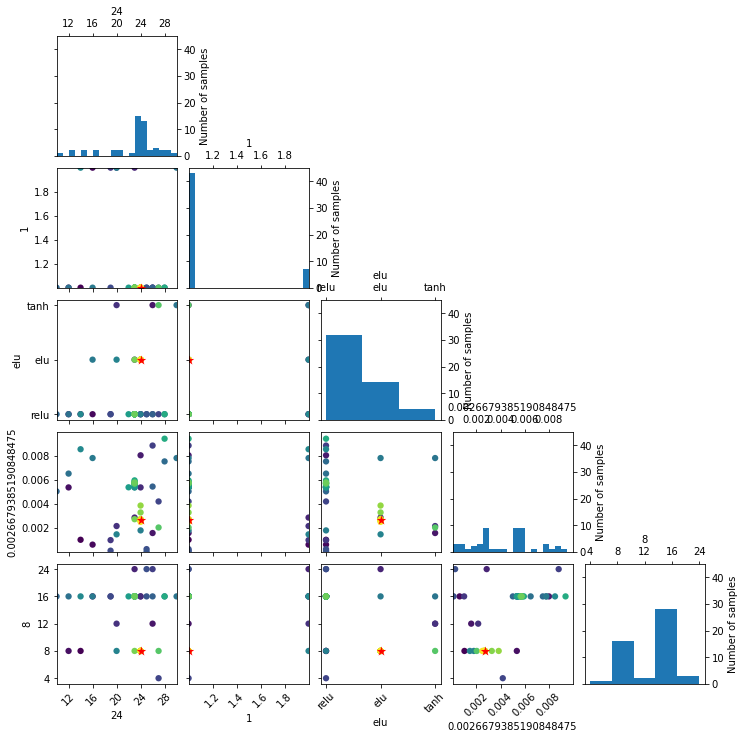

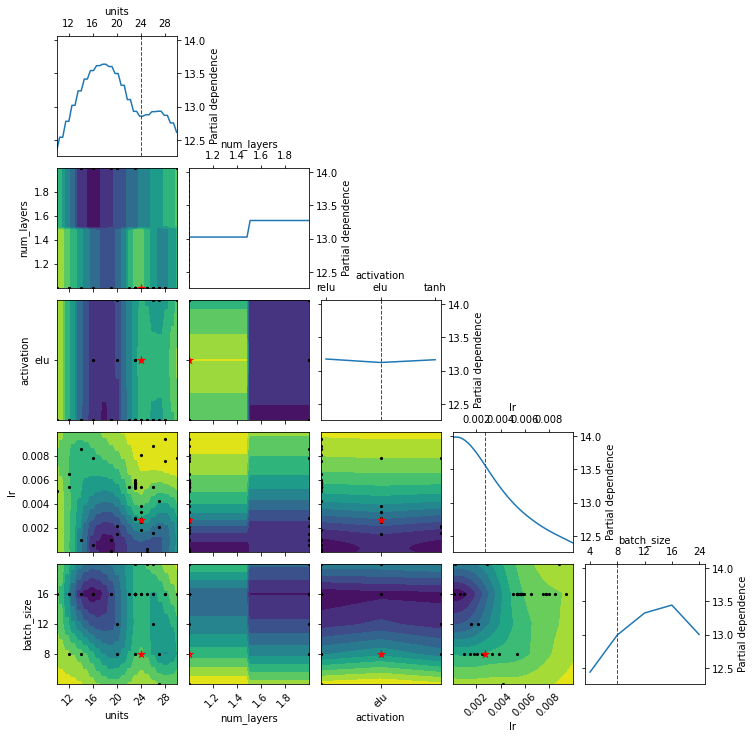

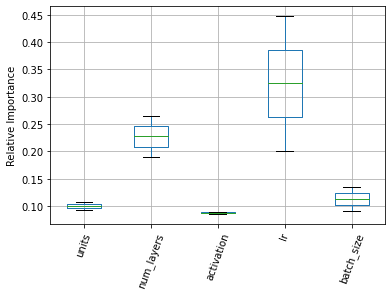

_model = Model(

model=MLP(units=suggestions['units'],

num_layers=suggestions['num_layers'],

activation=suggestions['activation'],

dropout=0.2),

batch_size=suggestions["batch_size"],

lr=suggestions["lr"],

prefix=prefix or PREFIX,

epochs=epochs,

input_features=data.columns.tolist()[0:-1],

output_features=data.columns.tolist()[-1:],

verbosity=verbosity)

SUGGESTIONS[ITER] = suggestions

# ii) train model

if fit_on_all_training_data:

_model.fit(x=TrainX, y=TrainY, validation_data=(TestX, TestY), verbose=0)

prediction = _model.predict(x=TestX)

true = TestY

else:

_model.fit(x=train_x, y=train_y, validation_data=(val_x, val_y), verbose=0)

prediction = _model.predict(x=val_x)

true = val_y

# iii) evaluate model

metrics = RegressionMetrics(true, prediction)

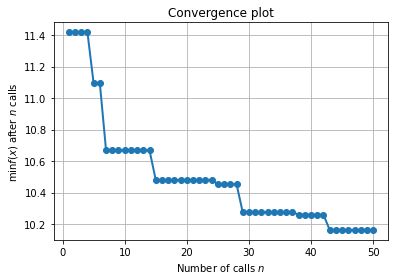

val_score = metrics.rmse()

# here we are evaluating model with respect to mse, therefore

# we don't need to subtract it from 1.0

if not math.isfinite(val_score):

val_score = 9999

print(f"{ITER} {round(val_score, 2)}")

ITER += 1

if return_model:

return _model

return val_score
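The docstring says the optimizer calls the objective function once per iteration with a fresh `suggestions` dictionary and minimizes whatever it returns. A minimal sketch of that contract, assuming plain random search in place of the optimizer actually used (which is not shown here) and a toy loss standing in for model training; `random_search`, `toy_objective` and the search space below are hypothetical.

```python
import random
from typing import Callable, Dict, List, Tuple

# Hypothetical search space: each entry lists the candidate values.
SPACE: Dict[str, List] = {
    "units": [16, 32, 64, 128],
    "num_layers": [1, 2, 3],
    "lr": [1e-4, 1e-3, 1e-2],
}

ITER = 0                           # global iteration counter, as in objective_fn
SUGGESTIONS: Dict[int, dict] = {}  # iteration -> suggested hyperparameters


def toy_objective(**suggestions) -> float:
    """Hypothetical stand-in for objective_fn: returns a loss to be minimized."""
    global ITER
    SUGGESTIONS[ITER] = suggestions
    ITER += 1
    # Pretend the best configuration is units=64, num_layers=2, lr=1e-3.
    return (abs(suggestions["units"] - 64) / 64
            + abs(suggestions["num_layers"] - 2)
            + abs(suggestions["lr"] - 1e-3) * 100)


def random_search(fn: Callable[..., float], space: Dict[str, List],
                  num_iterations: int, seed: int = 0) -> Tuple[dict, float]:
    """Call fn once per iteration with **suggestions; keep the lowest score."""
    rng = random.Random(seed)
    best_params, best_score = {}, float("inf")
    for _ in range(num_iterations):
        suggestions = {name: rng.choice(values) for name, values in space.items()}
        score = fn(**suggestions)
        if score < best_score:
            best_params, best_score = suggestions, score
    return best_params, best_score


best, score = random_search(toy_objective, SPACE, num_iterations=30)
print(ITER, best, round(score, 4))
```

In the real example, the best suggestions would then be passed back to `objective_fn` together with `fit_on_all_training_data=True` and `return_model=True`, which is the post-optimization run the docstring describes.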
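The docstring notes that this example uses one `units` value and one `activation` for every layer, and that tuning them per layer requires changing the parameter space. One common way to do that, sketched here under the assumption that the search space can hold a fixed set of named entries, is to define per-layer names such as `units_0`, `units_1`, ... and collect them back into lists inside the objective function. The naming scheme and the `per_layer_space`/`collect_layers` helpers are illustrative, not part of the original example.

```python
from typing import Dict, List


def per_layer_space(max_layers: int, units: List[int],
                    activations: List[str]) -> Dict[str, List]:
    """Build a search space with one units/activation entry per layer."""
    space: Dict[str, List] = {"num_layers": list(range(1, max_layers + 1))}
    for i in range(max_layers):
        space[f"units_{i}"] = list(units)
        space[f"activation_{i}"] = list(activations)
    return space


def collect_layers(suggestions: Dict) -> Dict[str, List]:
    """Turn flat per-layer suggestions into lists for the first num_layers layers."""
    n = suggestions["num_layers"]
    return {
        "units": [suggestions[f"units_{i}"] for i in range(n)],
        "activation": [suggestions[f"activation_{i}"] for i in range(n)],
    }


space = per_layer_space(3, units=[16, 32, 64], activations=["relu", "tanh"])
suggestion = {"num_layers": 2, "units_0": 64, "activation_0": "relu",
              "units_1": 16, "activation_1": "tanh",
              "units_2": 32, "activation_2": "relu"}
print(collect_layers(suggestion))  # {'units': [64, 16], 'activation': ['relu', 'tanh']}
```

Entries for layers beyond `num_layers` are simply ignored in a given iteration. This keeps the space fixed-size, at the cost of a few dimensions the optimizer explores without effect.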
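The `fit_on_all_training_data` flag switches between two evaluation protocols. During the search the model is fit on the training split and scored on the validation split; afterwards it is refit on training plus validation data and scored on the held-out test split. A minimal sketch of that switch, assuming a one-variable least-squares line as a stand-in for the neural network, a chronological split, and hypothetical helper names (`split`, `fit_line`, `evaluate`).

```python
import math
from typing import List, Sequence, Tuple

Pair = Tuple[List[float], List[float]]


def split(x: Sequence[float], y: Sequence[float],
          train_frac: float = 0.6, val_frac: float = 0.2) -> Tuple[Pair, Pair, Pair]:
    """Chronological split into training, validation and test parts."""
    n = len(x)
    i = round(n * train_frac)
    j = i + round(n * val_frac)
    return ((list(x[:i]), list(y[:i])),
            (list(x[i:j]), list(y[i:j])),
            (list(x[j:]), list(y[j:])))


def fit_line(x: List[float], y: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = a * x + b."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    a = sxy / sxx
    return a, my - a * mx


def rmse(true: List[float], pred: List[float]) -> float:
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(true, pred)) / len(true))


def evaluate(x, y, fit_on_all_training_data: bool = False) -> float:
    (tr_x, tr_y), (va_x, va_y), (te_x, te_y) = split(x, y)
    if fit_on_all_training_data:
        # After HPO: train on training + validation data, score on test data.
        fit_x, fit_y, eval_x, eval_y = tr_x + va_x, tr_y + va_y, te_x, te_y
    else:
        # During HPO: train on training data, score on validation data.
        fit_x, fit_y, eval_x, eval_y = tr_x, tr_y, va_x, va_y
    a, b = fit_line(fit_x, fit_y)
    return rmse(eval_y, [a * xi + b for xi in eval_x])


xs = [float(i) for i in range(20)]
ys = [2.0 * xi + 1.0 for xi in xs]  # noiseless toy data
print(evaluate(xs, ys), evaluate(xs, ys, fit_on_all_training_data=True))
```

Keeping the test split out of every search iteration is what makes the final test score an honest estimate: the hyperparameters were chosen without ever seeing it.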
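The comment about not subtracting from 1.0 is about orientation: the optimizer minimizes, so an error metric such as RMSE can be returned directly, whereas a goodness-of-fit score such as R² (1.0 for a perfect fit) must first be turned into a loss, for example `1 - r2`. The `math.isfinite` check then replaces NaN or infinite scores, such as those from a diverged training run, with a large constant so the optimizer records that trial as bad instead of failing. A minimal sketch of both steps in plain Python rather than the `RegressionMetrics` class used above; the 9999 penalty mirrors the example, while `to_loss` and the metric helpers are hypothetical.

```python
import math
from typing import List

PENALTY = 9999.0  # value used for non-finite scores, as in objective_fn


def rmse(true: List[float], pred: List[float]) -> float:
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(true, pred)) / len(true))


def r2(true: List[float], pred: List[float]) -> float:
    """Coefficient of determination: 1.0 for a perfect fit."""
    mean = sum(true) / len(true)
    ss_res = sum((t - p) ** 2 for t, p in zip(true, pred))
    ss_tot = sum((t - mean) ** 2 for t in true)
    if ss_tot == 0.0:
        return float("nan")  # undefined for constant targets
    return 1.0 - ss_res / ss_tot


def to_loss(true: List[float], pred: List[float], metric: str = "rmse") -> float:
    """Return a 'lower is better' value, with a penalty for NaN/inf."""
    if metric == "rmse":
        score = rmse(true, pred)        # already lower-is-better
    elif metric == "r2":
        score = 1.0 - r2(true, pred)    # flip higher-is-better into a loss
    else:
        raise ValueError(f"unknown metric: {metric}")
    return score if math.isfinite(score) else PENALTY


true = [1.0, 2.0, 3.0, 4.0]
print(to_loss(true, [1.0, 2.0, 3.0, 5.0]))           # 0.5
print(to_loss(true, [1.0, 2.0, 3.0, 5.0], "r2"))     # 1 - r2
print(to_loss(true, [1.0, float("nan"), 3.0, 4.0]))  # 9999.0
```

The penalty only needs to be larger than any realistic score for the problem at hand; a fixed constant like 9999 works as long as the target's scale keeps genuine RMSE values well below it.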