[1]:

%matplotlib inline


Visualizing inside LSTM

[2]:

import tensorflow as tf

tf.compat.v1.disable_eager_execution()

from ai4water.functional import Model

from ai4water.models import LSTM

from ai4water.datasets import busan_beach

from ai4water.utils.utils import get_version_info

from ai4water.postprocessing import Visualize


[3]:

for k, v in get_version_info().items():
    print(f"{k} version: {v}")

python version: 3.9.7 | packaged by conda-forge | (default, Sep 29 2021, 19:20:16) [MSC v.1916 64 bit (AMD64)]

os version: nt

ai4water version: 1.06

lightgbm version: 3.3.1

tcn version: 3.4.0

catboost version: 0.26

xgboost version: 1.5.0

easy_mpl version: 0.21.2

SeqMetrics version: 1.3.3

tensorflow version: 2.7.0

keras.api._v2.keras version: 2.7.0

numpy version: 1.21.0

pandas version: 1.3.4

matplotlib version: 3.4.3

h5py version: 3.5.0

sklearn version: 1.0.1

shapefile version: 2.3.0

xarray version: 0.20.1

netCDF4 version: 1.5.7

optuna version: 2.10.1

skopt version: 0.9.0

hyperopt version: 0.2.7

plotly version: 5.3.1

lime version: NotDefined

seaborn version: 0.11.2

[4]:

data = busan_beach()

data.shape

[4]:

(1446, 14)

[5]:

input_features = data.columns.tolist()[0:-1]

output_features = data.columns.tolist()[-1:]

[6]:

lookback = 14
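With `ts_args={'lookback': lookback}`, each example given to the LSTM is a window of 14 consecutive rows of the 13 input columns, which is why the log later reports shapes such as `(121, 14, 13)`. The sketch below illustrates the idea with NumPy on a toy array. The helper `make_windows` is hypothetical (not part of ai4water), and it assumes the label is taken at the last row of each window; ai4water's exact alignment may differ. It also drops windows whose label is NaN, which mirrors the "Removing Examples with nan in labels" step and explains why far fewer than 1446 examples remain.

```python
import numpy as np


def make_windows(data, lookback):
    """Hypothetical helper: slide a window of `lookback` rows over a 2-D array
    whose last column is the target. Returns x of shape (n, lookback, n_inputs)
    and y of shape (n, 1). Assumes the label sits at the last row of each window."""
    inputs, target = data[:, :-1], data[:, -1]
    n = data.shape[0] - lookback + 1
    x = np.stack([inputs[i:i + lookback] for i in range(n)])
    y = target[lookback - 1:].reshape(-1, 1)
    # keep only windows whose label is observed (not NaN)
    keep = ~np.isnan(y[:, 0])
    return x[keep], y[keep]


# toy table: 30 time steps, 13 inputs + 1 target observed only every 5th step
rng = np.random.default_rng(0)
table = rng.normal(size=(30, 14))
table[:, -1] = np.nan
table[::5, -1] = 1.0

x, y = make_windows(table, lookback=14)
print(x.shape, y.shape)  # (3, 14, 13) (3, 1)
```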

[7]:

model = Model(
    model=LSTM(
        units=13,
        input_shape=(lookback, len(input_features)),
        dropout=0.2
    ),
    input_features=input_features,
    output_features=output_features,
    ts_args={'lookback': lookback},
    epochs=100
)

building DL model for regression problem using Model

Model: "model"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
Input (InputLayer)           [(None, 14, 13)]          0
LSTM_0 (LSTM)                (None, 13)                1404
Dropout (Dropout)            (None, 13)                0
Flatten (Flatten)            (None, 13)                0
Dense_out (Dense)            (None, 1)                 14
=================================================================
Total params: 1,418
Trainable params: 1,418
Non-trainable params: 0
_________________________________________________________________
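The parameter counts in the summary can be checked by hand. A standard Keras LSTM has four gates (input, forget, cell, output), each with an input kernel, a recurrent kernel and a bias, so it holds `4 * (units * input_dim + units * units + units)` weights; the Dense layer holds `in * out + out`. Dropout and Flatten have no weights. A small sketch of that arithmetic for this model (13 inputs, 13 units, 1 output):

```python
def lstm_params(units, input_dim):
    # 4 gates x (input kernel + recurrent kernel + bias)
    return 4 * (units * input_dim + units * units + units)


def dense_params(in_features, out_features):
    # kernel + bias
    return in_features * out_features + out_features


lstm = lstm_params(units=13, input_dim=13)
dense = dense_params(13, 1)
print(lstm, dense, lstm + dense)  # 1404 14 1418
```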

[8]:

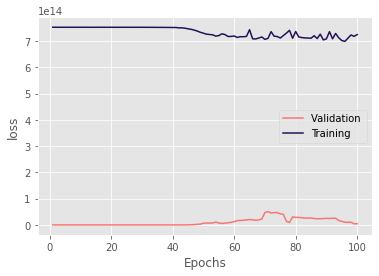

h = model.fit(data=data)

********** Removing Examples with nan in labels **********

***** Training *****

input_x shape: (121, 14, 13)

target shape: (121, 1)

********** Removing Examples with nan in labels **********

***** Validation *****

input_x shape: (31, 14, 13)

target shape: (31, 1)

Train on 121 samples, validate on 31 samples

Epoch 1/100

121/121 [==============================] - 0s 3ms/sample - loss: 752808851608330.6250 - val_loss: 10488966144.0000

Epoch 2/100

121/121 [==============================] - 0s 141us/sample - loss: 752799654470241.2500 - val_loss: 10436578304.0000

Epoch 3/100

121/121 [==============================] - 0s 83us/sample - loss: 752733421487840.2500 - val_loss: 10572704768.0000

Epoch 4/100

121/121 [==============================] - 0s 132us/sample - loss: 752740284339767.0000 - val_loss: 10431489024.0000

Epoch 5/100

121/121 [==============================] - 0s 124us/sample - loss: 752729785407572.6250 - val_loss: 10312578048.0000

Epoch 6/100

121/121 [==============================] - 0s 124us/sample - loss: 752739842863273.2500 - val_loss: 10255917056.0000

Epoch 7/100

121/121 [==============================] - 0s 132us/sample - loss: 752718517635190.3750 - val_loss: 10203176960.0000


Epoch 8/100

121/121 [==============================] - 0s 124us/sample - loss: 752704210829582.8750 - val_loss: 10136607744.0000

Epoch 9/100

121/121 [==============================] - 0s 132us/sample - loss: 752710126306092.5000 - val_loss: 10098843648.0000

Epoch 10/100

121/121 [==============================] - 0s 124us/sample - loss: 752769011509163.3750 - val_loss: 10049088512.0000

Epoch 11/100

121/121 [==============================] - 0s 91us/sample - loss: 752757978409823.2500 - val_loss: 10234416128.0000

Epoch 12/100

121/121 [==============================] - 0s 83us/sample - loss: 752708875172940.2500 - val_loss: 10217053184.0000

Epoch 13/100

121/121 [==============================] - 0s 91us/sample - loss: 752705627603079.3750 - val_loss: 10082214912.0000

Epoch 14/100

121/121 [==============================] - 0s 132us/sample - loss: 752669739335544.6250 - val_loss: 9811524608.0000

Epoch 15/100

121/121 [==============================] - 0s 132us/sample - loss: 752674242284857.1250 - val_loss: 9742213120.0000

Epoch 16/100

121/121 [==============================] - 0s 132us/sample - loss: 752707231005772.2500 - val_loss: 9623659520.0000

Epoch 17/100

121/121 [==============================] - 0s 132us/sample - loss: 752705939212135.6250 - val_loss: 9482016768.0000

Epoch 18/100

121/121 [==============================] - 0s 91us/sample - loss: 752677758576149.1250 - val_loss: 9499147264.0000

Epoch 19/100

121/121 [==============================] - 0s 83us/sample - loss: 752686638290351.6250 - val_loss: 10132308992.0000

Epoch 20/100

121/121 [==============================] - 0s 91us/sample - loss: 752695066276280.1250 - val_loss: 10144599040.0000

Epoch 21/100

121/121 [==============================] - 0s 91us/sample - loss: 752650241714768.3750 - val_loss: 9603352576.0000

Epoch 22/100

121/121 [==============================] - 0s 132us/sample - loss: 752693710233532.3750 - val_loss: 9429089280.0000

Epoch 23/100

121/121 [==============================] - 0s 132us/sample - loss: 752661277795184.1250 - val_loss: 9335957504.0000

Epoch 24/100

121/121 [==============================] - 0s 91us/sample - loss: 752671014126651.2500 - val_loss: 9371130880.0000

Epoch 25/100

121/121 [==============================] - 0s 91us/sample - loss: 752737018554147.8750 - val_loss: 9357902848.0000

Epoch 26/100

121/121 [==============================] - 0s 132us/sample - loss: 752637075343884.7500 - val_loss: 9117692928.0000

Epoch 27/100

121/121 [==============================] - 0s 132us/sample - loss: 752640447980264.7500 - val_loss: 8701662208.0000

Epoch 28/100

121/121 [==============================] - 0s 414us/sample - loss: 752621537700703.2500 - val_loss: 8535814144.0000

Epoch 29/100

121/121 [==============================] - 0s 132us/sample - loss: 752664354819791.3750 - val_loss: 8333308928.0000

Epoch 30/100

121/121 [==============================] - 0s 132us/sample - loss: 752728890946246.8750 - val_loss: 8117473280.0000

Epoch 31/100

121/121 [==============================] - 0s 132us/sample - loss: 752561550140949.1250 - val_loss: 7966671872.0000

Epoch 32/100

121/121 [==============================] - 0s 132us/sample - loss: 752552801724060.6250 - val_loss: 7785810944.0000

Epoch 33/100

121/121 [==============================] - 0s 132us/sample - loss: 752469345334906.7500 - val_loss: 7617901056.0000

Epoch 34/100

121/121 [==============================] - 0s 132us/sample - loss: 752465908917375.0000 - val_loss: 7293483008.0000

Epoch 35/100

121/121 [==============================] - 0s 132us/sample - loss: 752287875756607.5000 - val_loss: 7060156416.0000

Epoch 36/100

121/121 [==============================] - 0s 124us/sample - loss: 752359961387617.2500 - val_loss: 6954109952.0000

Epoch 37/100

121/121 [==============================] - 0s 132us/sample - loss: 752203100900123.5000 - val_loss: 6423758848.0000

Epoch 38/100

121/121 [==============================] - 0s 132us/sample - loss: 752178094251693.5000 - val_loss: 6084926976.0000

Epoch 39/100

121/121 [==============================] - 0s 141us/sample - loss: 752010086933360.1250 - val_loss: 4863798272.0000

Epoch 40/100

121/121 [==============================] - 0s 91us/sample - loss: 751800095359923.7500 - val_loss: 5168786944.0000

Epoch 41/100

121/121 [==============================] - 0s 91us/sample - loss: 751948886387822.0000 - val_loss: 7834909696.0000

Epoch 42/100

121/121 [==============================] - 0s 83us/sample - loss: 750503142972153.7500 - val_loss: 14509023232.0000

Epoch 43/100

121/121 [==============================] - 0s 82us/sample - loss: 750576965495647.2500 - val_loss: 32540444672.0000

Epoch 44/100

121/121 [==============================] - 0s 91us/sample - loss: 749076966495934.3750 - val_loss: 81642332160.0000

Epoch 45/100

121/121 [==============================] - 0s 91us/sample - loss: 746833996614292.1250 - val_loss: 183883776000.0000

Epoch 46/100

121/121 [==============================] - 0s 83us/sample - loss: 744862359811435.8750 - val_loss: 624000696320.0000

Epoch 47/100

121/121 [==============================] - 0s 83us/sample - loss: 742071068715194.2500 - val_loss: 1134083833856.0000

Epoch 48/100

121/121 [==============================] - 0s 83us/sample - loss: 738194007129028.7500 - val_loss: 2545008246784.0000

Epoch 49/100

121/121 [==============================] - 0s 82us/sample - loss: 733557622880814.5000 - val_loss: 2949091950592.0000

Epoch 50/100

121/121 [==============================] - 0s 83us/sample - loss: 730166508321191.1250 - val_loss: 6661849743360.0000

Epoch 51/100

121/121 [==============================] - 0s 91us/sample - loss: 726465431454914.6250 - val_loss: 7214866628608.0000

Epoch 52/100

121/121 [==============================] - 0s 82us/sample - loss: 725136615979566.5000 - val_loss: 6913732378624.0000

Epoch 53/100

121/121 [==============================] - 0s 91us/sample - loss: 724051351193583.1250 - val_loss: 7583505580032.0000

Epoch 54/100

121/121 [==============================] - 0s 83us/sample - loss: 718930547481743.8750 - val_loss: 10768899637248.0000

Epoch 55/100

121/121 [==============================] - 0s 83us/sample - loss: 721047158417865.0000 - val_loss: 7541499101184.0000

Epoch 56/100

121/121 [==============================] - 0s 91us/sample - loss: 727782427104281.5000 - val_loss: 5533039329280.0000

Epoch 57/100

121/121 [==============================] - 0s 91us/sample - loss: 724851009458954.6250 - val_loss: 6803712638976.0000

Epoch 58/100

121/121 [==============================] - 0s 82us/sample - loss: 717575583952659.0000 - val_loss: 8264783233024.0000

Epoch 59/100

121/121 [==============================] - 0s 83us/sample - loss: 717898769218636.2500 - val_loss: 9542710591488.0000

Epoch 60/100

121/121 [==============================] - 0s 91us/sample - loss: 719421051437597.6250 - val_loss: 12448743555072.0000

Epoch 61/100

121/121 [==============================] - 0s 90us/sample - loss: 714152258784839.8750 - val_loss: 16522991894528.0000

Epoch 62/100

121/121 [==============================] - 0s 91us/sample - loss: 716866605391533.5000 - val_loss: 16844900532224.0000

Epoch 63/100

121/121 [==============================] - 0s 99us/sample - loss: 716648930407787.8750 - val_loss: 18011597570048.0000

Epoch 64/100

121/121 [==============================] - 0s 99us/sample - loss: 717873354821462.7500 - val_loss: 19021799882752.0000

Epoch 65/100

121/121 [==============================] - 0s 91us/sample - loss: 743360632602488.6250 - val_loss: 20562942361600.0000

Epoch 66/100

121/121 [==============================] - 0s 92us/sample - loss: 708742982252560.8750 - val_loss: 19376503783424.0000

Epoch 67/100

121/121 [==============================] - 0s 91us/sample - loss: 708263152627068.8750 - val_loss: 17819521515520.0000

Epoch 68/100

121/121 [==============================] - 0s 91us/sample - loss: 711715854700103.8750 - val_loss: 19164710305792.0000

Epoch 69/100

121/121 [==============================] - 0s 91us/sample - loss: 715768483672910.2500 - val_loss: 22459285766144.0000

Epoch 70/100

121/121 [==============================] - 0s 91us/sample - loss: 706586433188415.5000 - val_loss: 47252202586112.0000

Epoch 71/100

121/121 [==============================] - 0s 91us/sample - loss: 710573745200948.8750 - val_loss: 50736712908800.0000

Epoch 72/100

121/121 [==============================] - 0s 91us/sample - loss: 736041725925189.7500 - val_loss: 45571217817600.0000

Epoch 73/100

121/121 [==============================] - 0s 91us/sample - loss: 718597931479209.2500 - val_loss: 46810152304640.0000

Epoch 74/100

121/121 [==============================] - 0s 91us/sample - loss: 717560062012119.7500 - val_loss: 47032974704640.0000

Epoch 75/100

121/121 [==============================] - 0s 91us/sample - loss: 711767566412774.5000 - val_loss: 42235647229952.0000

Epoch 76/100

121/121 [==============================] - 0s 91us/sample - loss: 721031219785355.6250 - val_loss: 39964234481664.0000

Epoch 77/100

121/121 [==============================] - 0s 91us/sample - loss: 730650694303337.8750 - val_loss: 13622182936576.0000

Epoch 78/100

121/121 [==============================] - 0s 83us/sample - loss: 741011147946136.3750 - val_loss: 9114667188224.0000

Epoch 79/100

121/121 [==============================] - 0s 91us/sample - loss: 710986772527933.3750 - val_loss: 30521157484544.0000

Epoch 80/100

121/121 [==============================] - 0s 99us/sample - loss: 736547273670926.8750 - val_loss: 28595615432704.0000

Epoch 81/100

121/121 [==============================] - 0s 91us/sample - loss: 715928079366567.1250 - val_loss: 28626238046208.0000

Epoch 82/100

121/121 [==============================] - 0s 91us/sample - loss: 713436734821672.2500 - val_loss: 27361896562688.0000

Epoch 83/100

121/121 [==============================] - 0s 91us/sample - loss: 712160043452669.8750 - val_loss: 26244563337216.0000

Epoch 84/100

121/121 [==============================] - 0s 91us/sample - loss: 711783521579854.2500 - val_loss: 26309426151424.0000

Epoch 85/100

121/121 [==============================] - 0s 83us/sample - loss: 710872945807461.5000 - val_loss: 26197251588096.0000

Epoch 86/100

121/121 [==============================] - 0s 99us/sample - loss: 720539686387847.3750 - val_loss: 24467099090944.0000

Epoch 87/100

121/121 [==============================] - 0s 91us/sample - loss: 709697993937403.7500 - val_loss: 23493441748992.0000

Epoch 88/100

121/121 [==============================] - 0s 82us/sample - loss: 726522066881849.1250 - val_loss: 23687310868480.0000

Epoch 89/100

121/121 [==============================] - 0s 91us/sample - loss: 704586689600520.5000 - val_loss: 24182951772160.0000

Epoch 90/100

121/121 [==============================] - 0s 99us/sample - loss: 708604452693389.7500 - val_loss: 25029125341184.0000

Epoch 91/100

121/121 [==============================] - 0s 91us/sample - loss: 736013906909268.6250 - val_loss: 24599494393856.0000

Epoch 92/100

121/121 [==============================] - 0s 83us/sample - loss: 708833295670145.0000 - val_loss: 25555661488128.0000

Epoch 93/100

121/121 [==============================] - 0s 91us/sample - loss: 729197566902136.6250 - val_loss: 25436948004864.0000

Epoch 94/100

121/121 [==============================] - 0s 91us/sample - loss: 712706560847905.8750 - val_loss: 16725047246848.0000

Epoch 95/100

121/121 [==============================] - 0s 83us/sample - loss: 702254782266985.8750 - val_loss: 13382452248576.0000

Epoch 96/100

121/121 [==============================] - 0s 99us/sample - loss: 698993693367169.0000 - val_loss: 10446747729920.0000

Epoch 97/100

121/121 [==============================] - 0s 99us/sample - loss: 711066420142418.5000 - val_loss: 9971104219136.0000

Epoch 98/100

121/121 [==============================] - 0s 99us/sample - loss: 723413766978907.0000 - val_loss: 10200312446976.0000

Epoch 99/100

121/121 [==============================] - 0s 99us/sample - loss: 718215736355273.0000 - val_loss: 4301657473024.0000

Epoch 100/100

121/121 [==============================] - 0s 91us/sample - loss: 724765087373667.3750 - val_loss: 4585979641856.0000

********** Successfully loaded weights from weights_039_4863798272.00000.hdf5 file **********
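After training, the weights from the epoch with the lowest `val_loss` were restored: epoch 39, with `val_loss` 4863798272.0, matching the file name. The sketch below reproduces that selection on an excerpt of the logged values around the minimum. The file-name pattern (zero-padded epoch, then `val_loss` with five decimals) is inferred from the single name shown in the log and is an assumption, not documented ai4water behavior.

```python
# val_loss values copied from epochs 37-41 of the training log
val_loss = {
    37: 6423758848.0,
    38: 6084926976.0,
    39: 4863798272.0,
    40: 5168786944.0,
    41: 7834909696.0,
}

# epoch with the smallest validation loss
best_epoch = min(val_loss, key=val_loss.get)

# assumed naming pattern: weights_<epoch:03d>_<val_loss:.5f>.hdf5
fname = f"weights_{best_epoch:03d}_{val_loss[best_epoch]:.5f}.hdf5"
print(fname)  # weights_039_4863798272.00000.hdf5
```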

[9]:

visualizer = Visualize(model, verbosity=0)

gradients

********** Removing Examples with nan in labels **********

***** Training *****

input_x shape: (121, 14, 13)

target shape: (121, 1)

gradients of activations

********** Removing Examples with nan in labels **********

***** Training *****

input_x shape: (121, 14, 13)

target shape: (121, 1)

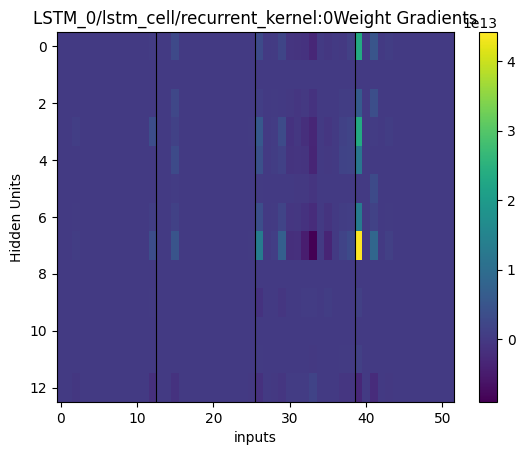

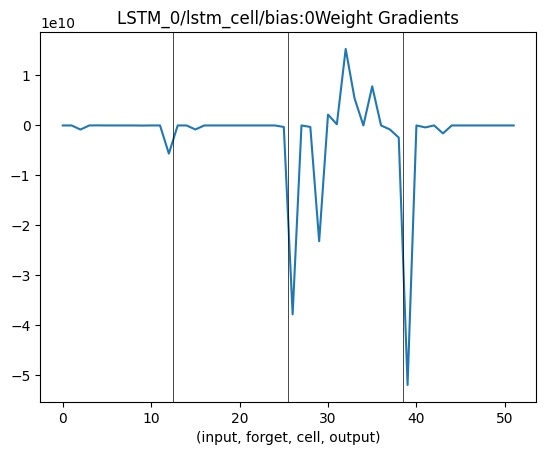

[12]:

x, y = model.all_data(data=data)

x.shape, y.shape

********** Removing Examples with nan in labels **********

***** Training *****

input_x shape: (121, 14, 13)

target shape: (121, 1)

********** Removing Examples with nan in labels **********

***** Validation *****

input_x shape: (31, 14, 13)

target shape: (31, 1)

********** Removing Examples with nan in labels **********

***** Test *****

input_x shape: (66, 14, 13)

target shape: (66, 1)

[12]:

((218, 14, 13), (218, 1))
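The 218 examples returned by `all_data` equal 121 + 31 + 66, the sizes of the training, validation and test sets printed above. This is consistent with `all_data` stacking the three splits along the first axis; the exact order in which ai4water concatenates them is an assumption here. A NumPy illustration with zero-filled stand-ins:

```python
import numpy as np

# example counts from the log: training, validation and test
split_sizes = {"train": 121, "validation": 31, "test": 66}

# zero-filled stand-ins with the windowed input shape (lookback=14, 13 inputs)
parts = [np.zeros((n, 14, 13)) for n in split_sizes.values()]
x_all = np.concatenate(parts, axis=0)
print(x_all.shape)  # (218, 14, 13)
```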

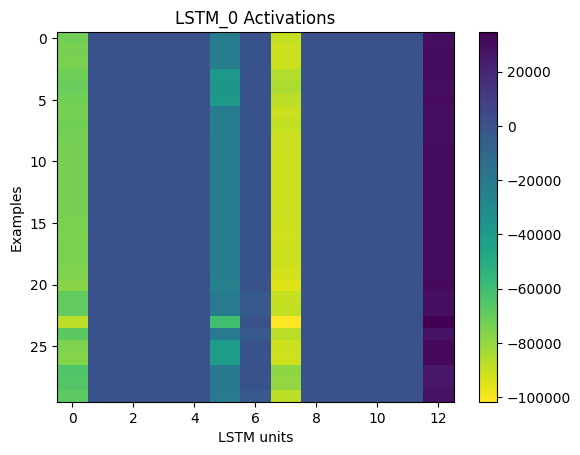

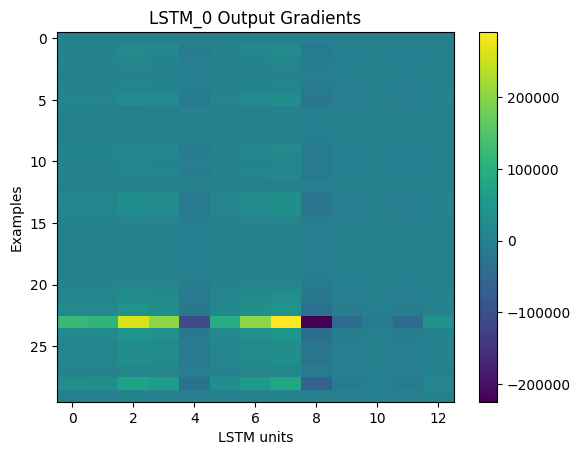

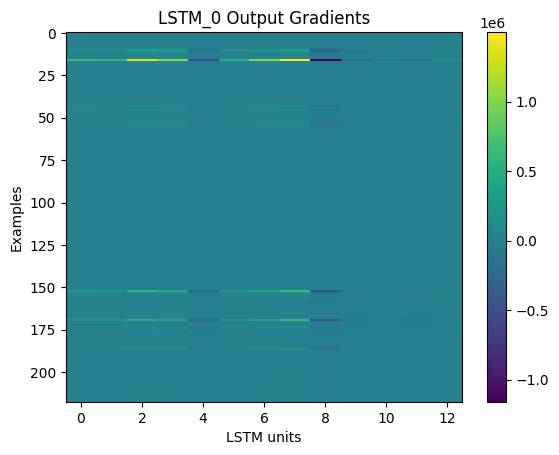

[13]:

_ = visualizer.activation_gradients("LSTM_0", x=x, y=y)
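Assuming "activation gradients" means the gradient of the loss with respect to a layer's output, the idea can be shown without TensorFlow. In this model the output of `LSTM_0` (after Dropout, which is the identity at inference, and Flatten) feeds `Dense_out`, so for an MSE loss the gradient with respect to the LSTM activations `h` is `(2/N) * (h @ W + b - y) @ W.T`. The NumPy sketch below uses random stand-ins for `h`, `W`, `b` and `y` (not the trained model) and checks one entry against a finite-difference estimate. It illustrates the concept only; it is not ai4water's implementation.

```python
import numpy as np

rng = np.random.default_rng(1)
n, units = 8, 13
h = rng.normal(size=(n, units))  # stand-in for LSTM_0 outputs (batch, units)
W = rng.normal(size=(units, 1))  # stand-in for the Dense_out kernel
b = np.zeros(1)                  # stand-in for the Dense_out bias
y = rng.normal(size=(n, 1))      # stand-in targets


def mse(h):
    y_hat = h @ W + b
    return np.mean((y_hat - y) ** 2)


# analytic gradient of the MSE loss with respect to the activations h
grad_h = 2.0 / n * (h @ W + b - y) @ W.T  # shape (n, units)

# central finite-difference check on a single entry
eps = 1e-6
h_plus, h_minus = h.copy(), h.copy()
h_plus[0, 0] += eps
h_minus[0, 0] -= eps
numeric = (mse(h_plus) - mse(h_minus)) / (2 * eps)
print(np.isclose(grad_h[0, 0], numeric))  # True
```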

weights

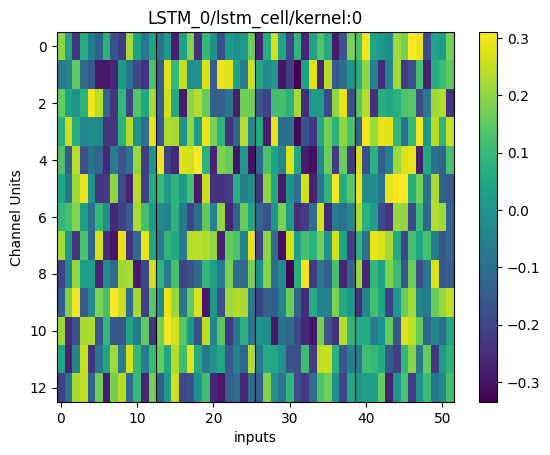

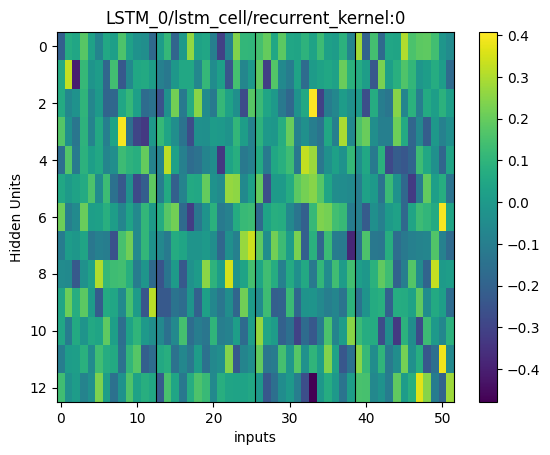

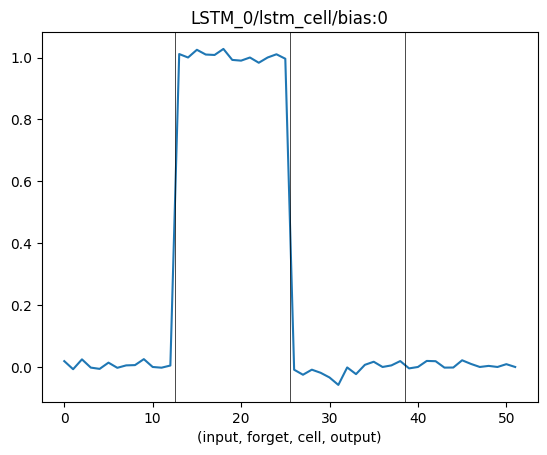

[14]:

_ = visualizer.weights(layer_names="LSTM_0", data=data)
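In Keras, an LSTM layer stores three arrays, returned by `layer.get_weights()` in the order kernel, recurrent kernel, bias. Each one has the four gates (input, forget, cell, output) concatenated along its last axis. The zero-filled NumPy stand-ins below only reproduce those shapes for `LSTM_0` (13 inputs, 13 units) and show how to split a kernel into per-gate blocks; they are not the trained weights, and nothing is assumed about how `visualizer.weights` arranges its plots.

```python
import numpy as np

units, input_dim = 13, 13

# stand-ins with the shapes Keras uses for an LSTM layer
kernel = np.zeros((input_dim, 4 * units))        # weights applied to the inputs
recurrent_kernel = np.zeros((units, 4 * units))  # weights applied to the hidden state
bias = np.zeros(4 * units)

# Keras orders the gate blocks as input, forget, cell, output
gate_names = ["input", "forget", "cell", "output"]
gates = dict(zip(gate_names, np.split(kernel, 4, axis=1)))

print({name: block.shape for name, block in gates.items()})
print(kernel.size + recurrent_kernel.size + bias.size)  # 1404
```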

gradients of weights

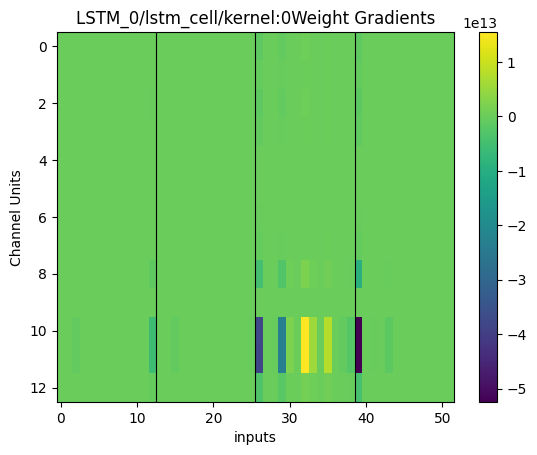

[15]:

_ = visualizer.weight_gradients(layer_names="LSTM_0", data=data)

********** Removing Examples with nan in labels **********

***** Training *****

input_x shape: (121, 14, 13)

target shape: (121, 1)
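Weight gradients are the gradients of the loss with respect to a layer's trainable parameters; they have the same shape as the weights themselves. For simplicity, the sketch below uses the `Dense_out` layer rather than the LSTM: for an MSE loss, the gradient with respect to the kernel is `(2/N) * h.T @ (h @ W + b - y)`. All arrays are random stand-ins, and the finite-difference check only confirms the formula. This is a conceptual illustration, not how ai4water computes the plotted gradients.

```python
import numpy as np

rng = np.random.default_rng(2)
n, units = 8, 13
h = rng.normal(size=(n, units))  # stand-in for the inputs reaching Dense_out
W = rng.normal(size=(units, 1))  # stand-in for the Dense_out kernel
b = np.zeros(1)                  # stand-in for the Dense_out bias
y = rng.normal(size=(n, 1))      # stand-in targets


def mse(W):
    return np.mean((h @ W + b - y) ** 2)


# analytic gradient of the MSE loss with respect to the kernel W
residual = h @ W + b - y
grad_W = 2.0 / n * h.T @ residual  # shape (units, 1), same as W

# central finite-difference check on a single entry
eps = 1e-6
W_plus, W_minus = W.copy(), W.copy()
W_plus[3, 0] += eps
W_minus[3, 0] -= eps
numeric = (mse(W_plus) - mse(W_minus)) / (2 * eps)
print(np.isclose(grad_W[3, 0], numeric))  # True
```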
