[1]:

%matplotlib inline


[ ]:

try:
    import ai4water
except (ImportError, ModuleNotFoundError):
    !pip install ai4water[tf2]

LSTM for time series forecasting

[2]:

from ai4water import Model

from ai4water.datasets import MtropicsLaos

from ai4water.utils.utils import get_version_info


[3]:

for k, v in get_version_info().items():
    print(f"{k} version: {v}")

python version: 3.9.7 | packaged by conda-forge | (default, Sep 29 2021, 19:20:16) [MSC v.1916 64 bit (AMD64)]

os version: nt

ai4water version: 1.06

lightgbm version: 3.3.1

tcn version: 3.4.0

catboost version: 0.26

xgboost version: 1.5.0

easy_mpl version: 0.21.2

SeqMetrics version: 1.3.3

tensorflow version: 2.7.0

keras.api._v2.keras version: 2.7.0

numpy version: 1.21.0

pandas version: 1.3.4

matplotlib version: 3.4.3

h5py version: 3.5.0

sklearn version: 1.0.1

shapefile version: 2.3.0

xarray version: 0.20.1

netCDF4 version: 1.5.7

optuna version: 2.10.1

skopt version: 0.9.0

hyperopt version: 0.2.7

plotly version: 5.3.1

lime version: NotDefined

seaborn version: 0.11.2

[4]:

dataset = MtropicsLaos(save_as_nc=True,  # if True, the netCDF4 package must be installed
                       convert_to_csv=False,
                       path="F:\\data\\MtropicsLaos",
                       )
lookback = 20
data = dataset.make_regression(lookback_steps=lookback)
data.shape

Not downloading the data since the directory F:\data\MtropicsLaos already exists.

Use overwrite=True to remove previously saved files and download again


[4]:

(4071, 9)
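The training log further down reports `input_x` with shape `(206, 20, 8)`: each sample is a window of 20 consecutive rows (`lookback`) of the 8 input columns, and only samples with a measured target survive. A minimal NumPy sketch of that general windowing technique follows, assuming the target is aligned with the last row of each window and that windows with a NaN target are dropped. `make_windows` is a hypothetical helper for illustration, not ai4water's internal code.

```python
import numpy as np

def make_windows(x, y, lookback):
    """Build (samples, lookback, n_features) windows from a 2-D feature array.

    Window i covers rows i .. i + lookback - 1 of `x` and is paired with
    y[i + lookback - 1], the target at the window's last row. Windows whose
    target is NaN are dropped (assumed to mirror the "Removing Examples with
    nan in labels" step; this is not ai4water's own code).
    """
    # shape (n - lookback + 1, n_features, lookback); the window axis is appended last
    windows = np.lib.stride_tricks.sliding_window_view(x, lookback, axis=0)
    windows = windows.transpose(0, 2, 1)      # -> (samples, lookback, n_features)
    targets = y[lookback - 1:]                # one target per window
    keep = ~np.isnan(targets).any(axis=1)     # drop windows with a missing label
    return windows[keep], targets[keep]

rng = np.random.default_rng(0)
x = rng.normal(size=(100, 8))                 # 100 time steps, 8 input features
y = rng.normal(size=(100, 1))
y[::2] = np.nan                               # pretend only odd rows have a label
xw, yw = make_windows(x, y, lookback=20)
print(xw.shape, yw.shape)                     # (41, 20, 8) (41, 1)
```

Sparse labels explain why the 4071 rows of `data` shrink to a few hundred usable samples: a window is only useful when its target was actually measured.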

[5]:

inputs = data.columns.tolist()[0:-1]

inputs

[5]:

['air_temp',

'rel_hum',

'wind_speed',

'sol_rad',

'water_level',

'pcp',

'susp_pm',

'Ecoli_source']
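The model built in the next cell standardizes the inputs (`x_transformation="zscore"`) and log-transforms the target (`y_transformation` with `"method": "log"`). The sketch below shows both techniques in plain NumPy. It assumes the z-score statistics are estimated on training rows, and its zero handling (replace zeros with a hypothetical `eps` before taking the log) is an illustrative assumption; ai4water's exact `replace_zeros` and `treat_negatives` rules may differ, and negatives are not handled here.

```python
import numpy as np

def zscore_fit(x):
    """Per-column mean and standard deviation (estimate these on training rows)."""
    return x.mean(axis=0), x.std(axis=0)

def zscore_apply(x, mean, std):
    """Standardize each column to zero mean and unit variance."""
    return (x - mean) / std

def log_target(y, eps=1e-6):
    """Natural log of a non-negative target.

    Zeros are replaced by `eps` first (a hypothetical choice); negative values
    are not handled, so ai4water's treat_negatives option is not modelled.
    """
    y = np.asarray(y, dtype=float)
    return np.log(np.where(y == 0, eps, y))

x_train = np.array([[1.0, 10.0], [3.0, 30.0]])
mean, std = zscore_fit(x_train)
z = zscore_apply(x_train, mean, std)   # each column becomes [-1, 1]
y_log = log_target([0.0, 1.0, np.e])   # [log(1e-6), 0, 1]
```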

[6]:

layers = {
    "Input": {'config': {'shape': (lookback, len(inputs))}},
    "LSTM": {'config': {'units': 14, 'activation': "elu"}},
    "Dense": 1
}

model = Model(
    input_features=inputs,
    output_features=data.columns.tolist()[-1:],
    model={'layers': layers},
    ts_args={"lookback": lookback},
    lr=0.009919,
    batch_size=8,
    split_random=True,
    train_fraction=1.0,
    x_transformation="zscore",
    y_transformation={"method": "log", "replace_zeros": True, "treat_negatives": True},
    epochs=200
)

building DL model for regression problem using Model

Model: "model"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

Input (InputLayer) [(None, 20, 8)] 0

LSTM (LSTM) (None, 14) 1288

Dense (Dense) (None, 1) 15

=================================================================

Total params: 1,303

Trainable params: 1,303

Non-trainable params: 0

_________________________________________________________________
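The parameter counts in the summary follow from the standard LSTM formulation: each of the four gates has an input kernel (8 features x 14 units), a recurrent kernel (14 x 14) and a bias (14 values), while the `Dense` output layer has 14 weights plus one bias. A quick arithmetic check in Python (the helper names are illustrative):

```python
def lstm_params(units, n_features):
    # 4 gates (input, forget, cell candidate, output), each with an input kernel
    # (n_features x units), a recurrent kernel (units x units) and a bias (units)
    return 4 * (units * (n_features + units) + units)

def dense_params(n_in, n_out):
    # one weight per input-output pair plus one bias per output
    return n_in * n_out + n_out

lstm = lstm_params(units=14, n_features=8)
dense = dense_params(n_in=14, n_out=1)
print(lstm, dense, lstm + dense)  # 1288 15 1303
```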

[7]:

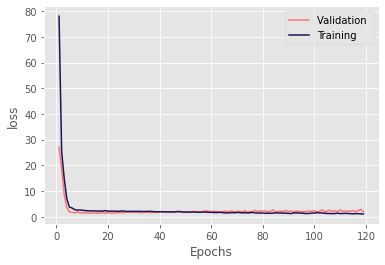

h = model.fit(data=data)

********** Removing Examples with nan in labels **********

***** Training *****

input_x shape: (206, 20, 8)

target shape: (206, 1)

********** Removing Examples with nan in labels **********

***** Validation *****

input_x shape: (52, 20, 8)

target shape: (52, 1)

Epoch 1/200


26/26 [==============================] - 2s 11ms/step - loss: 78.0383 - val_loss: 26.9827

Epoch 2/200

26/26 [==============================] - 0s 5ms/step - loss: 25.4774 - val_loss: 18.8905

Epoch 3/200

26/26 [==============================] - 0s 5ms/step - loss: 14.9665 - val_loss: 8.7782

Epoch 4/200

26/26 [==============================] - 0s 5ms/step - loss: 7.0218 - val_loss: 3.4659

Epoch 5/200

26/26 [==============================] - 0s 5ms/step - loss: 3.7423 - val_loss: 1.9051

Epoch 6/200

26/26 [==============================] - 0s 4ms/step - loss: 3.4310 - val_loss: 1.7568

Epoch 7/200

26/26 [==============================] - 0s 4ms/step - loss: 2.7946 - val_loss: 1.5086

Epoch 8/200

26/26 [==============================] - 0s 4ms/step - loss: 2.5614 - val_loss: 1.8149

Epoch 9/200

26/26 [==============================] - 0s 4ms/step - loss: 2.5903 - val_loss: 1.5388

Epoch 10/200

26/26 [==============================] - 0s 4ms/step - loss: 2.5322 - val_loss: 1.4675

Epoch 11/200

26/26 [==============================] - 0s 4ms/step - loss: 2.3507 - val_loss: 1.4359

Epoch 12/200

26/26 [==============================] - 0s 4ms/step - loss: 2.3415 - val_loss: 1.5000

Epoch 13/200

26/26 [==============================] - 0s 4ms/step - loss: 2.2680 - val_loss: 1.3931

Epoch 14/200

26/26 [==============================] - 0s 4ms/step - loss: 2.2826 - val_loss: 1.5056

Epoch 15/200

26/26 [==============================] - 0s 4ms/step - loss: 2.2283 - val_loss: 1.3600

Epoch 16/200

26/26 [==============================] - 0s 4ms/step - loss: 2.1756 - val_loss: 1.3777

Epoch 17/200

26/26 [==============================] - 0s 4ms/step - loss: 2.2203 - val_loss: 1.4283

Epoch 18/200

26/26 [==============================] - 0s 4ms/step - loss: 2.1854 - val_loss: 1.5904

Epoch 19/200

26/26 [==============================] - 0s 4ms/step - loss: 2.3765 - val_loss: 1.3355

Epoch 20/200

26/26 [==============================] - 0s 4ms/step - loss: 2.1578 - val_loss: 1.5137

Epoch 21/200

26/26 [==============================] - 0s 4ms/step - loss: 2.1805 - val_loss: 1.4412

Epoch 22/200

26/26 [==============================] - 0s 4ms/step - loss: 2.1697 - val_loss: 1.4324

Epoch 23/200

26/26 [==============================] - 0s 4ms/step - loss: 2.1543 - val_loss: 1.4875

Epoch 24/200

26/26 [==============================] - 0s 4ms/step - loss: 2.0792 - val_loss: 1.5516

Epoch 25/200

26/26 [==============================] - 0s 4ms/step - loss: 2.2077 - val_loss: 1.6654

Epoch 26/200

26/26 [==============================] - 0s 4ms/step - loss: 2.2215 - val_loss: 1.5029

Epoch 27/200

26/26 [==============================] - 0s 4ms/step - loss: 2.0324 - val_loss: 1.8807

Epoch 28/200

26/26 [==============================] - 0s 4ms/step - loss: 2.0951 - val_loss: 1.7207

Epoch 29/200

26/26 [==============================] - 0s 4ms/step - loss: 2.0782 - val_loss: 1.7571

Epoch 30/200

26/26 [==============================] - 0s 4ms/step - loss: 2.0621 - val_loss: 1.7785

Epoch 31/200

26/26 [==============================] - 0s 4ms/step - loss: 2.0790 - val_loss: 1.7358

Epoch 32/200

26/26 [==============================] - 0s 4ms/step - loss: 2.1032 - val_loss: 1.6230

Epoch 33/200

26/26 [==============================] - 0s 4ms/step - loss: 2.0606 - val_loss: 1.5836

Epoch 34/200

26/26 [==============================] - 0s 4ms/step - loss: 2.0150 - val_loss: 1.7484

Epoch 35/200

26/26 [==============================] - 0s 4ms/step - loss: 2.0663 - val_loss: 1.7289

Epoch 36/200

26/26 [==============================] - 0s 5ms/step - loss: 2.0914 - val_loss: 1.6749

Epoch 37/200

26/26 [==============================] - 0s 6ms/step - loss: 2.0429 - val_loss: 1.6325

Epoch 38/200

26/26 [==============================] - 0s 4ms/step - loss: 1.9600 - val_loss: 1.8131

Epoch 39/200

26/26 [==============================] - 0s 4ms/step - loss: 1.9286 - val_loss: 1.7169

Epoch 40/200

26/26 [==============================] - 0s 4ms/step - loss: 1.9502 - val_loss: 1.8640

Epoch 41/200

26/26 [==============================] - 0s 4ms/step - loss: 1.9467 - val_loss: 1.9784

Epoch 42/200

26/26 [==============================] - 0s 4ms/step - loss: 1.9145 - val_loss: 1.8156

Epoch 43/200

26/26 [==============================] - 0s 4ms/step - loss: 1.8927 - val_loss: 1.8794

Epoch 44/200

26/26 [==============================] - 0s 4ms/step - loss: 1.9059 - val_loss: 1.7426

Epoch 45/200

26/26 [==============================] - 0s 4ms/step - loss: 1.8567 - val_loss: 1.9001

Epoch 46/200

26/26 [==============================] - 0s 4ms/step - loss: 1.8493 - val_loss: 1.9401

Epoch 47/200

26/26 [==============================] - 0s 4ms/step - loss: 2.0169 - val_loss: 1.9715

Epoch 48/200

26/26 [==============================] - 0s 4ms/step - loss: 1.9213 - val_loss: 2.1020

Epoch 49/200

26/26 [==============================] - 0s 4ms/step - loss: 1.8113 - val_loss: 1.9011

Epoch 50/200

26/26 [==============================] - 0s 4ms/step - loss: 1.7978 - val_loss: 1.8903

Epoch 51/200

26/26 [==============================] - 0s 4ms/step - loss: 1.8025 - val_loss: 1.9471

Epoch 52/200

26/26 [==============================] - 0s 4ms/step - loss: 1.7739 - val_loss: 1.7793

Epoch 53/200

26/26 [==============================] - 0s 4ms/step - loss: 1.8192 - val_loss: 2.1097

Epoch 54/200

26/26 [==============================] - 0s 4ms/step - loss: 1.8179 - val_loss: 1.9036

Epoch 55/200

26/26 [==============================] - 0s 4ms/step - loss: 1.7332 - val_loss: 1.8925

Epoch 56/200

26/26 [==============================] - 0s 4ms/step - loss: 1.7616 - val_loss: 1.8577

Epoch 57/200

26/26 [==============================] - 0s 4ms/step - loss: 1.8437 - val_loss: 2.0430

Epoch 58/200

26/26 [==============================] - 0s 4ms/step - loss: 1.7640 - val_loss: 2.3366

Epoch 59/200

26/26 [==============================] - 0s 4ms/step - loss: 1.7109 - val_loss: 2.0880

Epoch 60/200

26/26 [==============================] - 0s 5ms/step - loss: 1.7181 - val_loss: 1.9773

Epoch 61/200

26/26 [==============================] - 0s 4ms/step - loss: 1.6474 - val_loss: 2.1006

Epoch 62/200

26/26 [==============================] - 0s 4ms/step - loss: 1.6376 - val_loss: 1.9836

Epoch 63/200

26/26 [==============================] - 0s 4ms/step - loss: 1.6859 - val_loss: 1.9822

Epoch 64/200

26/26 [==============================] - 0s 4ms/step - loss: 1.6723 - val_loss: 1.8385

Epoch 65/200

26/26 [==============================] - 0s 4ms/step - loss: 1.5259 - val_loss: 2.1795

Epoch 66/200

26/26 [==============================] - 0s 4ms/step - loss: 1.4792 - val_loss: 2.1116

Epoch 67/200

26/26 [==============================] - 0s 4ms/step - loss: 1.4955 - val_loss: 1.9483

Epoch 68/200

26/26 [==============================] - 0s 4ms/step - loss: 1.6099 - val_loss: 2.2973

Epoch 69/200

26/26 [==============================] - 0s 4ms/step - loss: 1.5268 - val_loss: 1.8486

Epoch 70/200

26/26 [==============================] - 0s 4ms/step - loss: 1.6557 - val_loss: 2.0699

Epoch 71/200

26/26 [==============================] - 0s 4ms/step - loss: 1.6293 - val_loss: 2.0666

Epoch 72/200

26/26 [==============================] - 0s 4ms/step - loss: 1.4971 - val_loss: 1.8501

Epoch 73/200

26/26 [==============================] - 0s 4ms/step - loss: 1.5565 - val_loss: 2.3651

Epoch 74/200

26/26 [==============================] - 0s 4ms/step - loss: 1.4828 - val_loss: 1.7770

Epoch 75/200

26/26 [==============================] - 0s 4ms/step - loss: 1.5315 - val_loss: 2.0530

Epoch 76/200

26/26 [==============================] - 0s 4ms/step - loss: 1.6978 - val_loss: 1.9833

Epoch 77/200

26/26 [==============================] - 0s 4ms/step - loss: 1.4611 - val_loss: 2.4645

Epoch 78/200

26/26 [==============================] - 0s 4ms/step - loss: 1.4407 - val_loss: 2.0058

Epoch 79/200

26/26 [==============================] - 0s 4ms/step - loss: 1.4570 - val_loss: 2.2919

Epoch 80/200

26/26 [==============================] - 0s 4ms/step - loss: 1.4043 - val_loss: 2.1670

Epoch 81/200

26/26 [==============================] - 0s 4ms/step - loss: 1.3432 - val_loss: 2.2628

Epoch 82/200

26/26 [==============================] - 0s 4ms/step - loss: 1.3707 - val_loss: 1.9328

Epoch 83/200

26/26 [==============================] - 0s 4ms/step - loss: 1.3362 - val_loss: 2.2226

Epoch 84/200

26/26 [==============================] - 0s 4ms/step - loss: 1.4187 - val_loss: 2.6026

Epoch 85/200

26/26 [==============================] - 0s 4ms/step - loss: 1.5127 - val_loss: 1.9446

Epoch 86/200

26/26 [==============================] - 0s 4ms/step - loss: 1.4494 - val_loss: 2.0469

Epoch 87/200

26/26 [==============================] - 0s 4ms/step - loss: 1.4525 - val_loss: 2.1871

Epoch 88/200

26/26 [==============================] - 0s 5ms/step - loss: 1.3628 - val_loss: 2.0229

Epoch 89/200

26/26 [==============================] - 0s 4ms/step - loss: 1.3952 - val_loss: 2.4309

Epoch 90/200

26/26 [==============================] - 0s 4ms/step - loss: 1.3025 - val_loss: 1.9898

Epoch 91/200

26/26 [==============================] - 0s 4ms/step - loss: 1.2165 - val_loss: 2.2065

Epoch 92/200

26/26 [==============================] - 0s 4ms/step - loss: 1.4830 - val_loss: 1.8223

Epoch 93/200

26/26 [==============================] - 0s 4ms/step - loss: 1.5106 - val_loss: 2.2720

Epoch 94/200

26/26 [==============================] - 0s 4ms/step - loss: 1.4248 - val_loss: 2.0414

Epoch 95/200

26/26 [==============================] - 0s 4ms/step - loss: 1.4237 - val_loss: 1.9304

Epoch 96/200

26/26 [==============================] - 0s 4ms/step - loss: 1.2869 - val_loss: 2.0285

Epoch 97/200

26/26 [==============================] - 0s 4ms/step - loss: 1.2413 - val_loss: 2.0274

Epoch 98/200

26/26 [==============================] - 0s 4ms/step - loss: 1.2791 - val_loss: 2.2272

Epoch 99/200

26/26 [==============================] - 0s 4ms/step - loss: 1.4163 - val_loss: 2.1095

Epoch 100/200

26/26 [==============================] - 0s 4ms/step - loss: 1.3964 - val_loss: 2.2720

Epoch 101/200

26/26 [==============================] - 0s 4ms/step - loss: 1.6256 - val_loss: 1.8829

Epoch 102/200

26/26 [==============================] - 0s 5ms/step - loss: 1.4604 - val_loss: 2.1002

Epoch 103/200

26/26 [==============================] - 0s 4ms/step - loss: 1.4628 - val_loss: 2.6744

Epoch 104/200

26/26 [==============================] - 0s 4ms/step - loss: 1.3415 - val_loss: 1.9412

Epoch 105/200

26/26 [==============================] - 0s 4ms/step - loss: 1.2682 - val_loss: 2.2500

Epoch 106/200

26/26 [==============================] - 0s 4ms/step - loss: 1.2563 - val_loss: 2.5064

Epoch 107/200

26/26 [==============================] - 0s 4ms/step - loss: 1.2008 - val_loss: 2.0823

Epoch 108/200

26/26 [==============================] - 0s 4ms/step - loss: 1.2272 - val_loss: 2.3500

Epoch 109/200

26/26 [==============================] - 0s 4ms/step - loss: 1.3757 - val_loss: 1.9903

Epoch 110/200

26/26 [==============================] - 0s 4ms/step - loss: 1.2150 - val_loss: 2.6523

Epoch 111/200

26/26 [==============================] - 0s 4ms/step - loss: 1.2578 - val_loss: 2.1164

Epoch 112/200

26/26 [==============================] - 0s 4ms/step - loss: 1.3046 - val_loss: 2.2229

Epoch 113/200

26/26 [==============================] - 0s 4ms/step - loss: 1.2665 - val_loss: 2.1473

Epoch 114/200

26/26 [==============================] - 0s 4ms/step - loss: 1.1936 - val_loss: 2.1517

Epoch 115/200

26/26 [==============================] - 0s 4ms/step - loss: 1.1240 - val_loss: 2.3406

Epoch 116/200

26/26 [==============================] - 0s 5ms/step - loss: 1.2132 - val_loss: 1.9678

Epoch 117/200

26/26 [==============================] - 0s 4ms/step - loss: 1.1991 - val_loss: 2.4041

Epoch 118/200

26/26 [==============================] - 0s 4ms/step - loss: 1.0964 - val_loss: 2.8420

Epoch 119/200

26/26 [==============================] - 0s 4ms/step - loss: 1.0876 - val_loss: 1.9345

********** Successfully loaded weights from weights_019_1.33551.hdf5 file **********
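Training was configured for 200 epochs but stopped after epoch 119, and the restored file `weights_019_1.33551.hdf5` corresponds to epoch 19, which has the lowest `val_loss` in the log (1.3355). Since 119 = 19 + 100, the run is consistent with patience-based early stopping on `val_loss` with a patience of 100 epochs followed by restoring the best weights; the patience value is inferred from the log, not read from ai4water's settings. The stopping rule can be sketched in plain Python (`early_stop` is a hypothetical helper):

```python
def early_stop(val_losses, patience):
    """Return (best_epoch, stop_epoch), both 1-based, for patience-based stopping.

    Training halts once `patience` epochs have passed since the last strict
    improvement of the validation loss; the best epoch's weights are restored.
    """
    best_loss = float("inf")
    best_epoch = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    # patience never ran out: training used every epoch
    return best_epoch, len(val_losses)

# toy history: best value at epoch 19, no improvement afterwards, 200 epochs planned
best, stop = early_stop([2.0] * 18 + [1.3355] + [1.5] * 181, patience=100)
print(best, stop)  # 19 119
```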

[8]:

type(h)

[8]:

keras.callbacks.History

[9]:

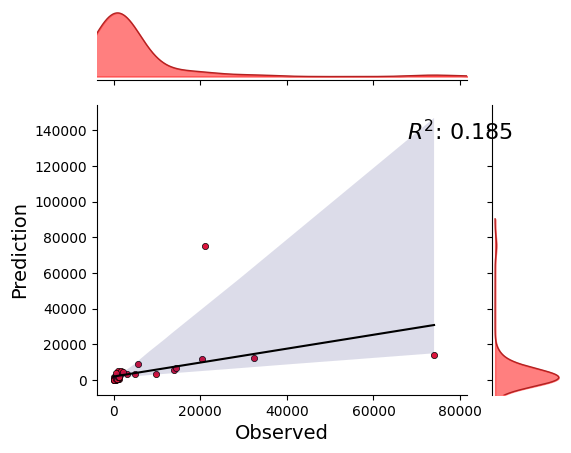

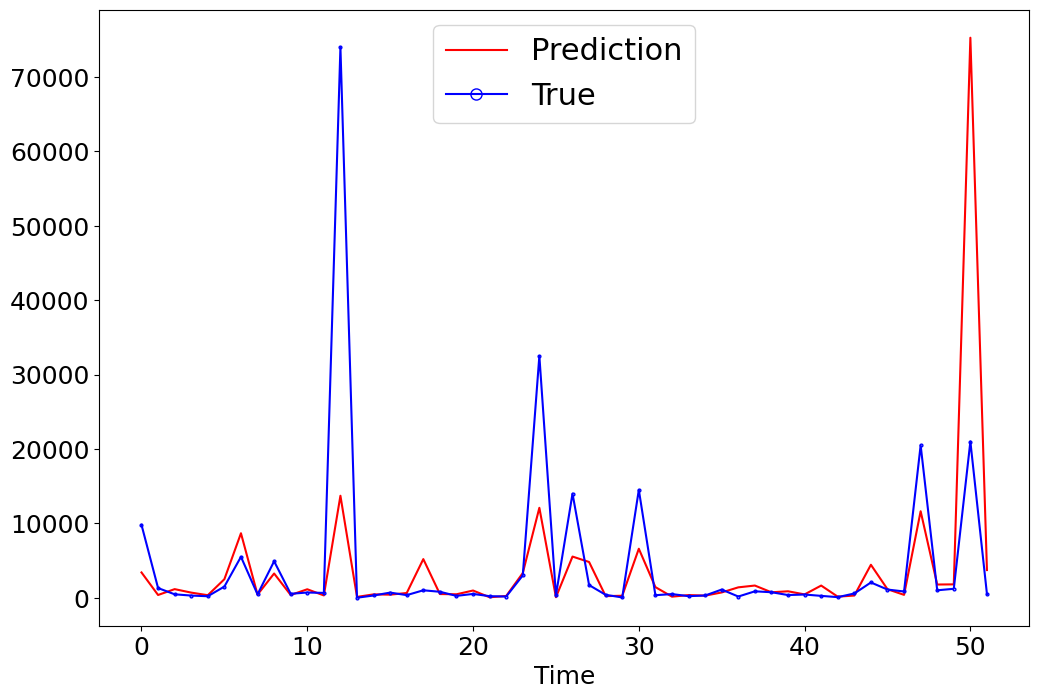

p = model.predict(data=data)

***** Test *****

input_x shape: (0,)

target shape: (0,)

********** Removing Examples with nan in labels **********

***** Validation *****

input_x shape: (52, 20, 8)

target shape: (52, 1)


No test data found. using validation data instead

2/2 [==============================] - 0s 2ms/step
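Because `train_fraction=1.0` leaves nothing for a test set, `predict` falls back to the validation data. The 258 labelled samples were divided into 206 training and 52 validation samples, which is consistent with holding out 20% of them and rounding up (258 * 0.2 = 51.6). A minimal sketch of such a random split, assuming a validation fraction of 0.2 and ceiling rounding; `random_split` is hypothetical and does not reproduce ai4water's actual shuffling.

```python
import math

import numpy as np

def random_split(n_samples, train_fraction=1.0, val_fraction=0.2, seed=0):
    """Hypothetical random train/validation/test split of sample indices.

    `train_fraction` is the share kept for training + validation (the rest is
    test); `val_fraction` is the share of that pool used for validation.
    Rounding the validation count up is an assumption chosen because it
    reproduces the 206/52 split in the log.
    """
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)
    n_pool = int(round(n_samples * train_fraction))
    pool, test = idx[:n_pool], idx[n_pool:]
    n_val = math.ceil(n_pool * val_fraction)
    return pool[n_val:], pool[:n_val], test

train_idx, val_idx, test_idx = random_split(258)
print(len(train_idx), len(val_idx), len(test_idx))  # 206 52 0
```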

[10]:

p = model.predict_on_training_data(data=data)

********** Removing Examples with nan in labels **********

***** Training *****

input_x shape: (206, 20, 8)

target shape: (206, 1)

7/7 [==============================] - 0s 1ms/step
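The progress bars count batches, not samples. The numbers in this notebook match ceiling division of the sample count by the batch size: 8 during `fit` (set with `batch_size=8`) and 32 during prediction, which is the Keras default for `Model.predict` when no batch size is passed. That prediction runs with the Keras default here is an inference from the counts, not something the notebook states; `n_batches` is a hypothetical helper.

```python
import math

def n_batches(n_samples, batch_size):
    # the last, partial batch still counts as one step
    return math.ceil(n_samples / batch_size)

print(n_batches(206, 8))   # 26 -> "26/26" per training epoch
print(n_batches(206, 32))  # 7  -> "7/7" in predict_on_training_data
print(n_batches(52, 32))   # 2  -> "2/2" when predicting on validation data
print(n_batches(258, 32))  # 9  -> "9/9" in predict_on_all_data
```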

[13]:

train_x, train_y = model.training_data(data=data)

********** Removing Examples with nan in labels **********

***** Training *****

input_x shape: (206, 20, 8)

target shape: (206, 1)

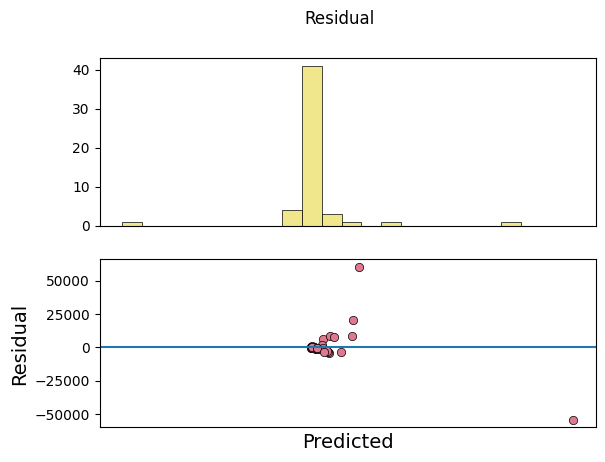

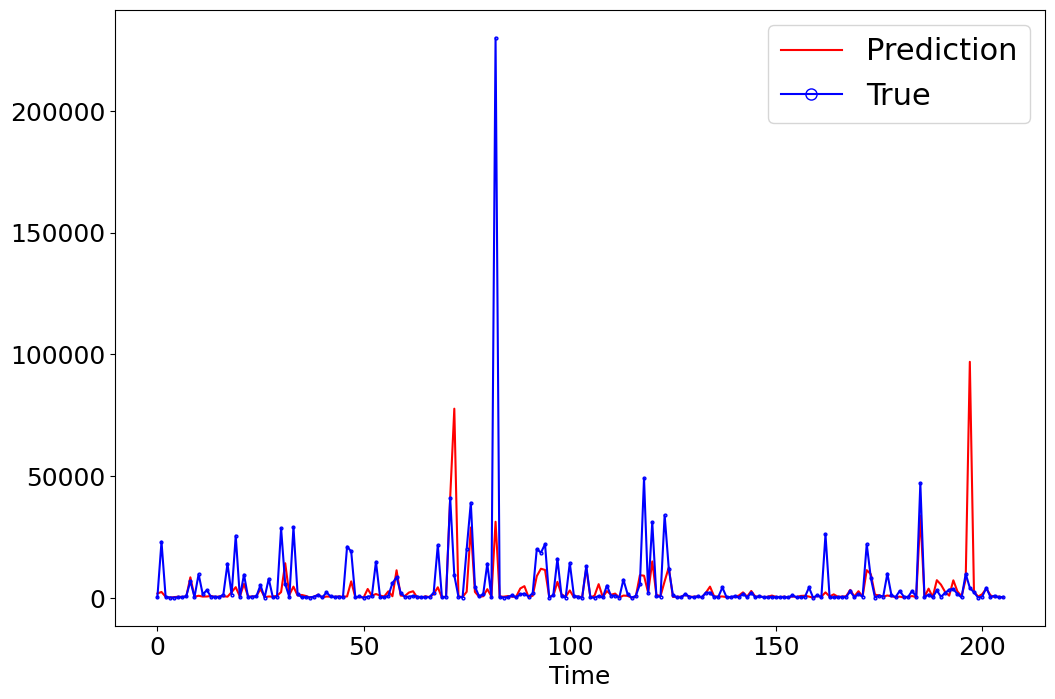

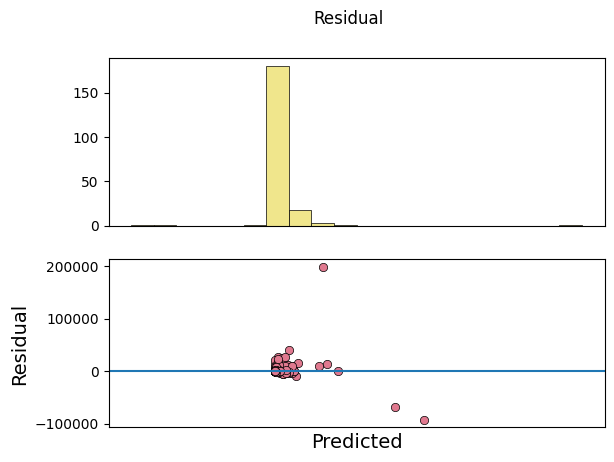

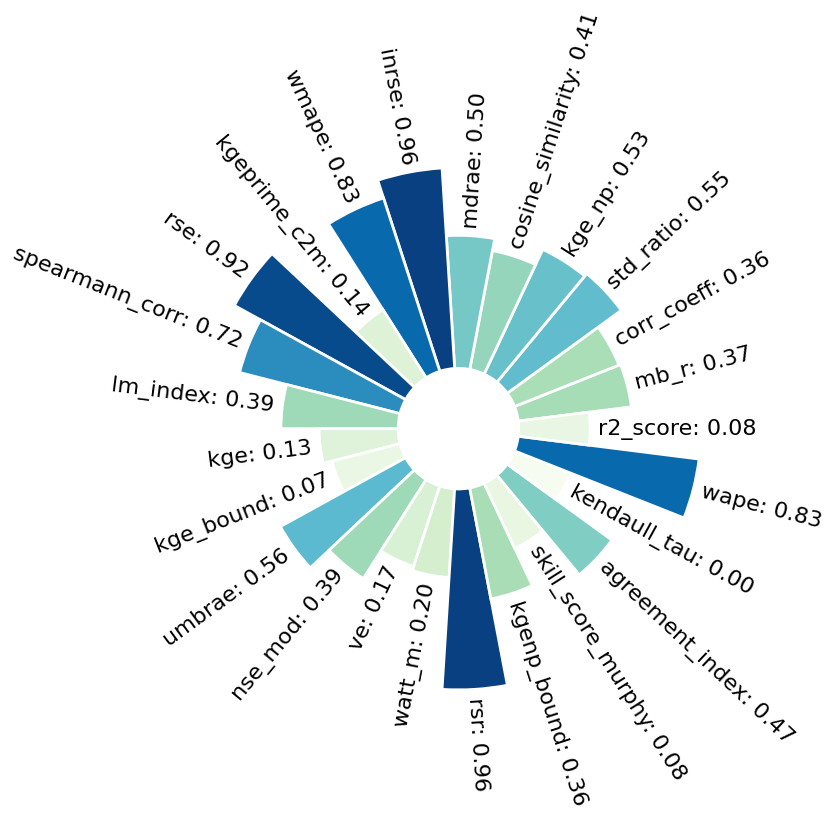

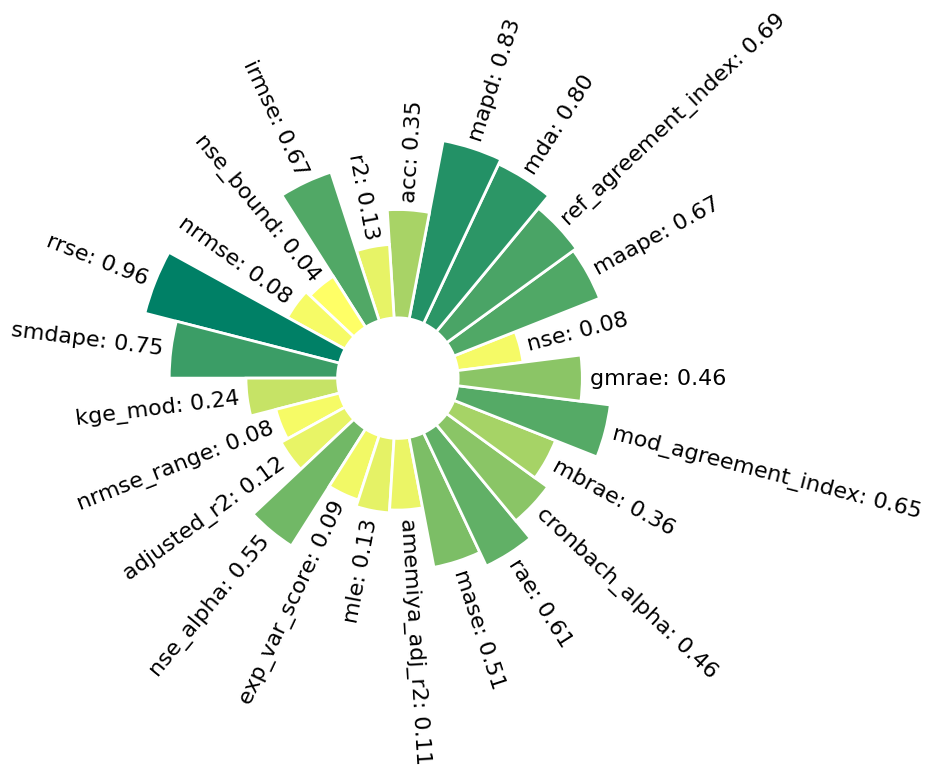

[15]:

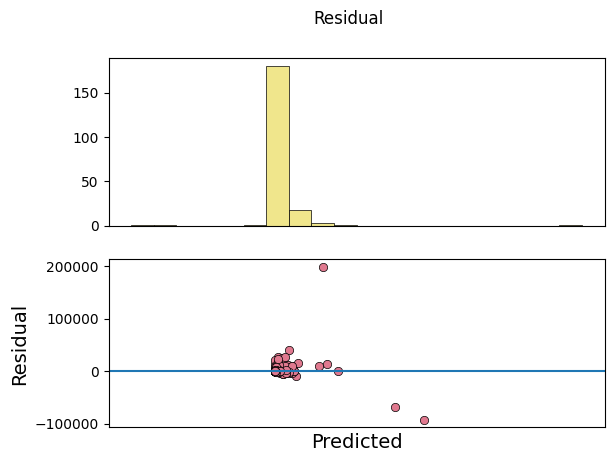

p = model.predict(x=train_x, y=train_y, plots=['residual'], verbose=0)

[12]:

t, p = model.predict_on_all_data(data=data, return_true=True, process_results=False)

t.shape, p.shape

********** Removing Examples with nan in labels **********

***** Training *****

input_x shape: (206, 20, 8)

target shape: (206, 1)

********** Removing Examples with nan in labels **********

***** Validation *****

input_x shape: (52, 20, 8)

target shape: (52, 1)

***** Test *****

input_x shape: (0,)

target shape: (0,)

9/9 [==============================] - 0s 1ms/step

[12]:

((258, 1), (258, 1))
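`t` and `p` hold the 258 true and predicted target values. One way to summarize them is with RMSE, the Nash-Sutcliffe efficiency (NSE) and the squared Pearson correlation, sketched below in NumPy. ai4water computes its own metrics through SeqMetrics, so `regression_scores` is a hypothetical stand-in; also, whether the arrays are in transformed or original units with `process_results=False` is not stated here, so interpret the scores accordingly.

```python
import numpy as np

def regression_scores(true, pred):
    """RMSE, NSE and squared Pearson correlation for (n,) or (n, 1) arrays."""
    true = np.asarray(true, dtype=float).ravel()
    pred = np.asarray(pred, dtype=float).ravel()
    resid = true - pred
    return {
        "rmse": float(np.sqrt(np.mean(resid ** 2))),
        # Nash-Sutcliffe efficiency: 1 - SSE / sum of squared deviations of the observations
        "nse": float(1.0 - np.sum(resid ** 2) / np.sum((true - true.mean()) ** 2)),
        # squared Pearson correlation between observations and predictions
        "r2": float(np.corrcoef(true, pred)[0, 1] ** 2),
    }

t_demo = np.array([[1.0], [2.0], [3.0], [4.0]])
p_demo = np.array([[1.0], [2.0], [3.0], [5.0]])
scores = regression_scores(t_demo, p_demo)  # rmse 0.5, nse 0.8
# with the notebook's arrays: regression_scores(t, p)
```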
